The Mirage of Objectivity in Market Research

Market Research markets itself as the objective backbone of decision‑making. Yet despite rigorous methodology, cognitive biases quietly distort how we collect, interpret, and visualize customer satisfaction. The industry isn’t dishonest; practitioners pursue insight with integrity.

But the very human tendency to simplify complexity gives rise to seductive visual narratives that may mislead. In a world awash with dashboards and metrics, interpreting customer satisfaction requires recognizing how visualization choices and data collection methods shape perceived reality. The question then becomes not whether Market Research tells the truth or lies, but how easily truths are bent and what leaders must do to reclaim accuracy.

Market Research Is Built on Data, But Data Isn’t Neutral

How Cognitive Bias Distorts Data Collection

Market Research attempts to extract objective truths about customer satisfaction through structured methods such as surveys and interviews, aiming to translate subjective experiences into reliable data. Yet this process is never purely neutral.

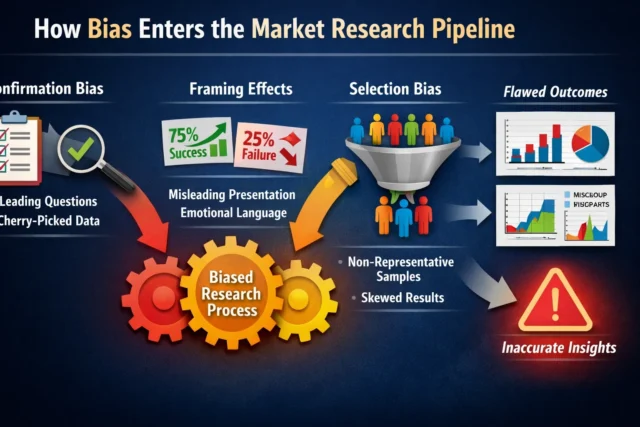

Cognitive biases subtly shape every stage of research design, data collection, and interpretation, influencing which questions are asked, how answers are framed, and which voices are ultimately heard. These effects occur unconsciously and persist even within rigorous methodologies:

- Confirmation bias: favoring questions or interpretations that validate prior assumptions or expected outcomes

- Framing effects: the order, context, and wording of questions significantly influence respondent perceptions and answers

- Selection bias: non-representative samples distort satisfaction indicators and overgeneralize limited viewpoints

These biases are well documented in behavioral science and affect even the most experienced researchers.
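To make the selection-bias point concrete, here is a minimal sketch in Python. The customer groups and scores are invented for illustration and do not come from any real study; the sketch only shows how surveying a single convenient group can shift the average.

```python
from statistics import mean

# Hypothetical satisfaction scores (1-10) for three customer groups.
# These numbers are illustrative assumptions, not real survey data.
population = {
    "loyalty_members": [9, 8, 9, 10, 8],
    "occasional_buyers": [5, 6, 4, 6, 5],
    "churned_customers": [2, 3, 2, 3, 1],
}


def mean_score(groups):
    """Average satisfaction over every score in the named groups."""
    return mean(score for name in groups for score in population[name])


# Surveying everyone versus surveying only the easiest group to reach.
full_population = mean_score(population.keys())
loyalty_only = mean_score(["loyalty_members"])

print(f"All customers:        {full_population:.1f}")  # 5.4
print(f"Loyalty members only: {loyalty_only:.1f}")     # 8.8
```

With these made-up numbers, a survey sent only to loyalty members reports 8.8 while the full customer base averages 5.4. The responses are all honest; the sample is what distorts the picture.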

Visualization Choices Shape Interpretation

How data is visualized alters interpretation dramatically. The same customer satisfaction dataset can look positive or alarming depending on color, axis scale, or aggregation level. Visualization isn’t neutral; design choices create narratives. For example, a heatmap that uses red to denote slight dissatisfaction can trigger alarm even when scores are close to average.

Pie charts can exaggerate small differences through segment placement. Such aesthetic decisions influence decision-makers subconsciously, reinforcing assumptions or downplaying concerns. To counter this, visualization standards and intentional labeling must be implemented. Charts must clarify (not manipulate) customer perceptions. Only then can data visualization support, rather than misguide, strategic insight.
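The effect of axis scale can be put into numbers. The sketch below is an illustration under assumed values: `visual_ratio` is a hypothetical helper, and the two quarterly averages are invented. It compares how much taller one bar is drawn than another when the axis starts at zero versus at a truncated baseline.

```python
def visual_ratio(a, b, baseline=0.0):
    """Ratio of drawn bar heights for values a and b when the axis starts at baseline."""
    if min(a, b) <= baseline:
        raise ValueError("baseline must be below both values")
    return (a - baseline) / (b - baseline)


# Hypothetical quarterly satisfaction averages on a 10-point scale.
q1, q2 = 8.2, 7.8

honest = visual_ratio(q1, q2)                    # axis starts at 0
truncated = visual_ratio(q1, q2, baseline=7.5)   # axis starts at 7.5

print(f"Axis from 0:   first bar is {honest:.2f}x the second")     # 1.05x
print(f"Axis from 7.5: first bar is {truncated:.2f}x the second")  # 2.33x
```

The underlying difference is about 5 percent, yet the truncated axis draws one bar more than twice as tall as the other. Stating the axis baseline on the chart is one simple form of the intentional labeling described above.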

The Illusion of Customer Satisfaction: Overconfidence in Metrics

Misreading the Voice of the Customer

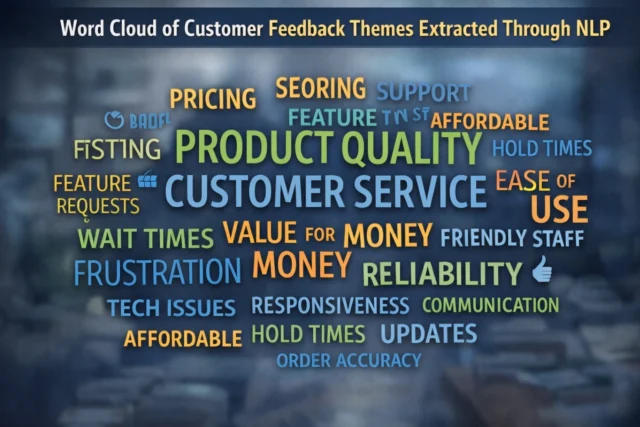

Survey scores and satisfaction indexes flatten complex human sentiment into numbers. Relying solely on numeric scales risks missing context. Emotional nuance, the reason customers feel satisfied or discontented, often hides between quantified scores. The 0-to-10 "likelihood to recommend" ratings behind a Net Promoter Score (NPS) might average 8 out of 10 but offer no explanation for the underlying hesitations. This is where qualitative data, like open-ended feedback, becomes vital.
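A short sketch shows how much a single average can hide. It uses the conventional NPS definition (percentage of promoters rating 9 or 10, minus percentage of detractors rating 0 to 6); the two rating sets are invented for illustration.

```python
from statistics import mean


def net_promoter_score(ratings):
    """Conventional NPS: % promoters (9-10) minus % detractors (0-6)."""
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Two hypothetical sets of 0-10 ratings with the same average.
steady = [8] * 10                 # everyone mildly positive
polarized = [10] * 6 + [5] * 4    # enthusiasts plus an unhappy minority

for label, ratings in (("steady", steady), ("polarized", polarized)):
    print(label, "mean:", mean(ratings), "NPS:", net_promoter_score(ratings))
# steady    -> mean 8, NPS 0.0
# polarized -> mean 8, NPS 20.0
```

Both groups average 8, yet one contains four customers rating 5. Neither the average nor the NPS explains why those four are unhappy, which is the gap open-ended feedback fills.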

Advanced tools, such as natural language processing, help extract recurring themes from customer narratives. IBM’s research on customer experience analytics emphasizes that AI can enhance, not replace, human interpretation by revealing emotional depth. Nuance matters more than numbers. (ibm.com)

Dashboards That Numb Rather Than Clarify

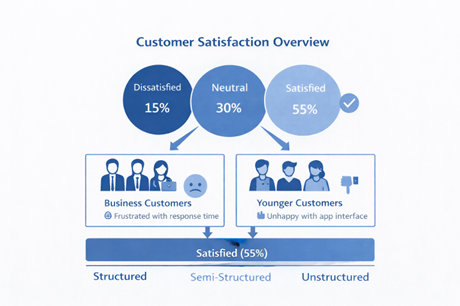

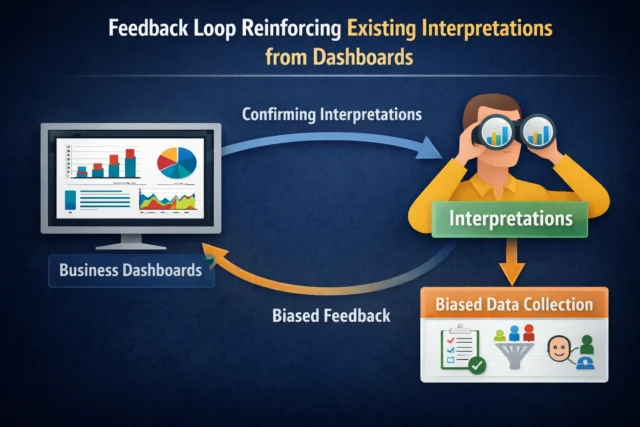

Excessive reliance on dashboards risks numbing stakeholders to nuance. A dashboard that reduces customer satisfaction to a single gauge or color can obscure problems that lurk beneath. This oversimplification often arises from the desire for real-time metrics: instant clarity at the cost of depth. But clarity without accuracy is dangerous. Decision-makers might be lulled into complacency by positive metrics while missing discontent brewing in key segments.

When dashboards summarize data into traffic lights or satisfaction thermometers, they hide the narrative behind the numbers. We must treat dashboards not as decision tools but as conversation starters prompting deeper inquiry into customer experience.

Often, dashboards become talking points rather than analytical tools. Leaders must demand transparency about what metrics represent and how they were derived.

AI in Market Research: Precision or Propaganda?

Machine Learning Reveals Hidden Patterns in Customer Feedback

Artificial Intelligence promises to elevate Market Research by identifying patterns beyond human detection. Machine learning models can analyze customer comments, behavioral data, and response trends to spot subtle shifts in satisfaction levels. For example, Deloitte insights on AI and customer experience describe how organizations use AI to drive operational efficiency and generate deeper customer understanding. (Deloitte)

AI doesn’t replace human judgment; it enhances it by:

- Detecting latent customer needs

- Highlighting trends that human analysts overlook

- Scaling analysis across massive datasets

But Who Trains the Model?

AI only reflects the data it’s trained on. If training data embodies existing biases, models can amplify them. This is a real risk in Market Research, where historical datasets may lack diversity or overrepresent certain customer segments. Issues like biased model outcomes have been explored in academic research on algorithmic fairness and visualization trust. (arxiv.org)

Mitigating this requires careful data governance, iterative retraining, and human oversight. Responsible AI isn’t optional; it’s essential for trustworthy Market Research.

The Feedback Loop of Misleading Insights in Market Research

When Flawed Data Drives Strategy

Incorrect interpretation of customer satisfaction can lead organizations astray. When teams take dashboard summaries at face value, entire strategies may be built on unstable foundations. High satisfaction scores, when not disaggregated by customer segment or geography, can hide dissatisfaction in critical areas. This misdirection leads companies to invest in the wrong initiatives or miss emerging pain points. For example, launching a loyalty campaign based on misleading metrics can waste resources while neglecting actual churn drivers.

Research from McKinsey emphasizes the importance of precision in customer segmentation to avoid such misfires. Market Research should never end with the dashboard; it starts there.

Common Misinterpretations and Strategic Consequences

| Misinterpretation in Market Research | Strategic Consequence |
| --- | --- |
| High overall satisfaction score masks churn in key segments | Reduced retention efforts where they matter |
| Ignoring open‑ended responses in favor of numeric scores | Failure to address emerging customer concerns |
| Over‑aggregating data across regions | Misallocation of marketing resources |

To avoid these pitfalls, organizations must revisit how they integrate quantitative scores with qualitative contexts.
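The first row of the table, a healthy overall score masking an unhappy segment, can be illustrated with a few lines of Python. The segment names and scores are hypothetical and chosen only to show the mechanism.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical (segment, score) survey responses on a 1-10 scale.
responses = [
    ("retail", 9), ("retail", 9), ("retail", 8),
    ("retail", 9), ("retail", 8), ("retail", 9),
    ("enterprise", 4), ("enterprise", 5),
]


def scores_by_segment(rows):
    """Group scores by segment and return the mean for each segment."""
    grouped = defaultdict(list)
    for segment, score in rows:
        grouped[segment].append(score)
    return {segment: mean(scores) for segment, scores in grouped.items()}


overall = mean(score for _, score in responses)
by_segment = scores_by_segment(responses)

print(f"Overall: {overall:.2f}")  # 7.62
for segment, value in by_segment.items():
    print(f"{segment}: {value:.2f}")  # retail: 8.67, enterprise: 4.50
```

An overall score near 7.6 looks comfortable, but the enterprise segment sits at 4.5. If that small segment carries a large share of revenue, the aggregate figure is pointing attention in the wrong direction.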

The High Cost of Believing Your Own Dashboard

Dashboards can easily become echo chambers when visualizations are treated as neutral representations of reality rather than constructed interpretations of data. When teams uncritically trust charts, scores, and trends, existing assumptions are silently reinforced instead of challenged. Over time, this creates a false sense of certainty that masks uncertainty and marginal perspectives. To avoid this trap, leaders must actively interrogate what they see on the screen and question the narratives being reinforced:

- What assumptions underlie this chart?

- What voices are missing from the dataset?

- How might alternative visual models change interpretation?

The danger isn’t deceptive intent; it’s unexamined trust in visually persuasive data.

Toward Ethical Accuracy in Market Research

Design Thinking in Data Collection

Reducing bias begins at the earliest stages of survey and study design, long before data is collected or visualized. By integrating principles from design thinking, Market Research teams can better account for human behavior, context, and interpretation when measuring customer satisfaction. This approach emphasizes empathy, iteration, and continuous learning, helping researchers move beyond rigid frameworks toward more reliable insights.

Key practices include deliberate methodological choices that minimize cognitive distortions and surface deeper meanings:

- Using open-ended questions to capture nuance, emotion, and unexpected drivers of satisfaction

- Randomizing question order to reduce priming effects and response conditioning

- Conducting iterative pilot tests to refine instruments, wording, and logic

These methods ensure richer insights beyond simple numeric ratings.
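Randomizing question order, one of the practices listed above, is straightforward to sketch. The question texts and the `question_order` helper below are hypothetical; the sketch assumes each respondent has an integer ID and uses it as a seed so that each respondent's order can be reproduced later during analysis.

```python
import random

# Hypothetical survey questions, for illustration only.
QUESTIONS = [
    "How satisfied are you with delivery speed?",
    "How satisfied are you with product quality?",
    "How satisfied are you with customer support?",
    "How satisfied are you with pricing?",
]


def question_order(respondent_id, questions=QUESTIONS):
    """Return the questions in a per-respondent shuffled order.

    Seeding with the respondent ID keeps the order reproducible,
    so analysts can check later whether position affected answers.
    """
    rng = random.Random(respondent_id)
    shuffled = list(questions)  # copy so the master list is untouched
    rng.shuffle(shuffled)
    return shuffled


for respondent_id in (101, 102):
    print(respondent_id, question_order(respondent_id))
```

Because every respondent sees the same questions in a different sequence, any priming effect from an early question is spread across the questionnaire rather than always landing on the same item.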

Transparency in Visualization Standards

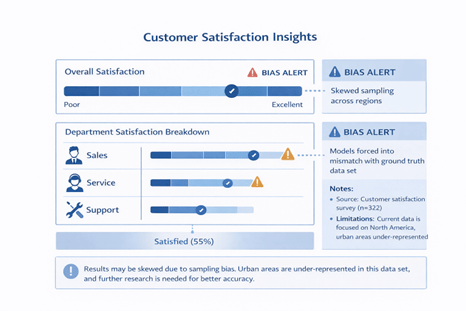

Just as accounting has clear rules, Market Research needs visualization standards. Transparency in how charts are built, including axis scaling, color coding, and segmentation logic, helps users understand the context behind the numbers. Visuals must disclose limitations: What’s missing? What’s assumed? Without such context, even well-intentioned graphics mislead. Consider introducing “bias alerts” on dashboards to flag oversimplified or underrepresented data points. McKinsey emphasizes the power of transparent storytelling in visual analytics, especially in customer experience. We need a code of ethics for dashboards, where accuracy trumps elegance. Without it, charts become illusions dressed as insights.

Standardizing visualization practices makes it easier for stakeholders to interpret metrics like customer satisfaction accurately, creating a shared language for truth.
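One possible reading of the "bias alerts" idea is a simple check that flags segments too thinly sampled to support a confident score. The sketch below is an assumption about how such a check might look: the `bias_alerts` function, the thresholds, and the response counts are all hypothetical, and real thresholds would depend on the study design.

```python
def bias_alerts(segment_counts, min_responses=30, min_share=0.05):
    """Flag segments with too few responses or too small a share of the sample.

    Both thresholds are illustrative defaults, not established standards.
    """
    total = sum(segment_counts.values())
    alerts = {}
    for segment, count in segment_counts.items():
        if count < min_responses:
            alerts[segment] = f"only {count} responses"
        elif count / total < min_share:
            alerts[segment] = f"only {count / total:.0%} of the sample"
    return alerts


# Hypothetical response counts per customer segment.
counts = {"retail": 420, "enterprise": 12, "public_sector": 45}

for segment, reason in bias_alerts(counts).items():
    print(f"Bias alert for {segment}: {reason}")
# Bias alert for enterprise: only 12 responses
```

A dashboard could display such a flag beside the affected score so that viewers know which numbers rest on thin evidence.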

Counterarguments: Skepticism vs. Innovation in Market Research

Critics Say Dashboards Oversimplify Reality

It’s true that dashboards often reduce complex datasets to digestible snapshots. Critics argue this fosters superficial decision‑making. This concern is valid; simplifying complex phenomena can strip context and nuance.

However, the solution isn’t to abandon dashboards but to design them better. Dashboards must invite curiosity, not complacency. Visual prompts can guide viewers to explore layers beneath the surface. Paired with training and literacy efforts, visualizations become tools for insight. As MIT Technology Review suggests, the future lies in “explainable data,” where dashboards are transparent about both structure and limits. Done right, dashboards illuminate. Done wrong, they sedate.

Advocates Claim AI Solves All Problems

Some proponents of AI suggest it eliminates bias and subjectivity. This is overly optimistic. AI can reveal patterns, but without thoughtful design, it may institutionalize hidden prejudices within data.

The nuanced position, and the one supported here, is that AI enhances Market Research when paired with human oversight and transparent methodology.

Comparison of Traditional and AI‑Enhanced Market Research

| Feature | Traditional Approach | AI‑Enhanced Approach |
| --- | --- | --- |
| Data processing speed | Moderate | High |
| Ability to detect subtle patterns | Low | High |
| Bias risk | High human bias | Bias dependent on training data |
| Interpretation depth | Context dependent | Context enhanced with algorithms |

In Market Research, Truth Demands More Than Data

Customer satisfaction is not a simple number; it is a complex signal shaped by human bias, methodological shortcomings, and the seductive clarity of visual narratives. Market Research professionals are not dishonest, but they work within systems prone to errors in collection and interpretation. The antidote isn’t skepticism of intention; it’s rigorous design, transparency, and embracing AI responsibly.

Leaders must demand standardized visualization practices, invest in qualitative context, and use AI as a tool for illumination, not a replacement for judgment. Only then will Market Research deliver truthful insights that guide strategy rather than comfort assumptions.

References:

IBM: Using AI to Improve Customer Experience

Deloitte: How AI Is Transforming the Customer Experience

McKinsey: Personalizing the Customer Experience

MIT Technology Review: Explainable AI and Data Visualization

World Economic Forum: Ethical Data Design in Market Research

Harvard Business Review: The Business Case for Combining AI and Human Judgment

Market Research in 2030: Automated Intelligence or Automated Mistakes? – H-in-Q