The New Arms Race in FinTech

The explosion of FinTech over the last decade has transformed banking, payments, and lending, but it has also created a new battleground: fraud. With the integration of AI, fraud detection has shifted from slow manual review to lightning‑fast, predictive analysis. Yet this transformation carries a cost: increasingly granular surveillance of customer behavior. The question is whether AI-powered fraud detection signals the end of cybercrime or the end of privacy. The stakes could not be higher for financial institutions, consumers, and regulators alike.

The FinTech Revolution: Efficiency Meets Surveillance

How FinTech and AI Are Rewriting the Rules

FinTech’s digital-first architecture makes it ideal terrain for real-time AI fraud detection. Rather than batching transactions for nightly review, AI systems evaluate each transaction as it arrives, checking it against the customer’s established behavior patterns, device fingerprints, and behavioral biometrics. This real-time vigilance enables financial institutions to intercept suspicious activity as it happens, a feat impossible in purely manual systems.
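The per-transaction check described above can be illustrated with a minimal Python sketch. It is not any institution's actual system: the `CustomerProfile` class, the `score_transaction` function, the z-score threshold of 3, and the sample amounts are all hypothetical, chosen only to show how a transaction might be compared against a customer's running statistics and known devices at the moment it arrives.

```python
import math
from dataclasses import dataclass, field


@dataclass
class CustomerProfile:
    """Running statistics for one customer (hypothetical structure)."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations (Welford's algorithm)
    known_devices: set = field(default_factory=set)

    def update(self, amount: float, device_id: str) -> None:
        """Fold one accepted transaction into the profile."""
        self.count += 1
        delta = amount - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (amount - self.mean)
        self.known_devices.add(device_id)

    def stddev(self) -> float:
        """Sample standard deviation of past amounts (0.0 if too few)."""
        return math.sqrt(self.m2 / (self.count - 1)) if self.count > 1 else 0.0


def score_transaction(profile, amount, device_id, z_threshold=3.0):
    """Return the reasons a transaction looks unusual (empty list = nothing found)."""
    reasons = []
    sd = profile.stddev()
    # Only judge the amount once there is some history to compare against.
    if profile.count >= 5 and sd > 0 and abs(amount - profile.mean) / sd > z_threshold:
        reasons.append("amount_outlier")
    if profile.count > 0 and device_id not in profile.known_devices:
        reasons.append("new_device")
    return reasons


# Illustrative history: six small purchases from one device.
profile = CustomerProfile()
for past_amount in [20.0, 25.0, 22.0, 19.0, 24.0, 21.0]:
    profile.update(past_amount, "device-A")

normal = score_transaction(profile, 23.0, "device-A")
suspicious = score_transaction(profile, 900.0, "device-B")
print(normal)      # []
print(suspicious)  # ['amount_outlier', 'new_device']
```

A production system would replace these two hand-written checks with a trained model and far richer signals, but the flow is the same: each transaction is scored against a stored profile before it is approved, and that stored profile is exactly the data whose collection raises the privacy questions discussed next.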

From Innovation to Intrusion: A Double-Edged Sword

However, the same capabilities that enable fraud prevention can also erode personal privacy. Continuous monitoring can evolve into continuous surveillance, with institutions collecting far more data than necessary. The convenience and speed that FinTech promises might come at a cost: deep behavioral profiling and data retention that can outlive the original transaction.

Fraud Is Evolving and So Is FinTech

Cybercriminals in the Age of Financial Technology

As FinTech evolves, so too do the methods of fraudsters. New scams leverage synthetic identities, deepfakes, bot‑driven transactions, and coordinated AI-powered attacks. Traditional rule‑based fraud filters struggle to keep up, because fraud today hides within large volumes of seemingly normal data. Machine learning must keep pace or risk being outmatched.

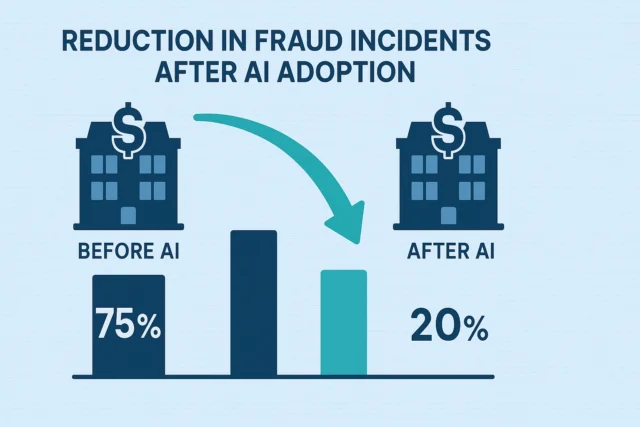

Counterattack: FinTech and AI Strike Back

AI-driven detection systems, trained on vast historical datasets and refined through continuous learning, can catch subtle anomalies that humans miss. According to research, machine learning techniques significantly outperform legacy fraud detection methods in identifying suspicious activities (Nature). In this evolutionary arms race, FinTech backed by AI offers the only scalable defense.

Comparing Detection Speed & Accuracy

| Method | Detection Speed | False Positive Rate | Fraud Detection Accuracy |
| --- | --- | --- | --- |
| Traditional Manual Review | Hours to days | ~5–10% | Moderate |
| Legacy Rule‑Based Systems | Minutes to hours | ~8–15% | Good |
| AI / Machine Learning Systems | Real-time (ms–s) | ~2–5% | High (Nature) |

The Cost of Real-Time Intelligence: Privacy or Progress?

Why Privacy Advocates Are Sounding the Alarm

AI in FinTech often requires biometric data, behavioral profiling, device fingerprinting, and sometimes location history. These layers of data collection can blur the line between fraud prevention and invasive surveillance. Without explicit, ongoing consent and strict data handling policies, institutions risk violating consumer privacy.

AI’s Necessity for Securing Financial Technology Partners

From the perspective of financial technology partners that provide infrastructure, risk, compliance, and payment services, robust fraud detection is not optional. To preserve trust, minimize losses, and meet regulatory expectations, these partners invest heavily in AI-driven surveillance systems. The cost of not doing so would be mass fraud incidents, reputational damage, and potential collapse of user confidence.

The Regulatory Gray Zone in FinTech

Where Law Fails, AI Fills the Gap

Regulations often lag behind technological innovations. As financial institutions rapidly adopt AI for fraud detection, lawmakers struggle to define clear frameworks for data collection, retention, and transparency. In many jurisdictions, existing financial laws were drafted before modern data analytics and AI came into widespread use. Today, firms operate in a legal gray zone.

Do FinTech Partners Care About Oversight?

Financial technology partners seldom prioritize regulatory completeness over speed and scalability. They focus on maximizing fraud prevention ROI rather than building transparent data governance. As a result, the balance tends toward aggressive data collection and minimal disclosure, undermining long-term consumer trust and regulatory compliance.

Global Data Privacy Laws vs. Common AI Practices in FinTech

| Region / Law | Data Privacy Requirements | Common AI Practices in FinTech Fraud Detection | Privacy Risk Level |
| --- | --- | --- | --- |
| EU (GDPR) | Consent, Right to Erasure, Data Minimization | Real-time transaction monitoring, behavioral biometrics, data retention for years | High |
| US (Varied by state) | Limited federal regulation, some state laws (e.g., CCPA) | Device fingerprinting, behavioral scoring, continuous monitoring | Moderate to High |
| Emerging Markets | Often weak or non-existent privacy laws | Full surveillance for identity verification and fraud detection | Very High (OECD) |

When Algorithms Decide: Accountability in FinTech

Who’s Liable When AI Flags the Wrong Person?

AI systems are not infallible. False positives (legitimate transactions flagged as fraudulent) can freeze accounts or block a user’s access to funds. In many cases, the institution or fintech provider bears no legal accountability. Consumers may suffer economic harm or reputational damage with no recourse.
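To see why false positives matter at scale, consider a rough calculation. The figures below are illustrative assumptions, not measured data: one million transactions, of which 1,000 are fraudulent, a model that catches 90% of the fraud, and a 2% false positive rate. The function name `flag_outcomes` and its parameters are hypothetical.

```python
def flag_outcomes(legit_count, fraud_count, recall, false_positive_rate):
    """Estimate how many flags are correct and how many hit legitimate users."""
    true_positives = fraud_count * recall                # fraud correctly flagged
    false_positives = legit_count * false_positive_rate  # legitimate activity flagged
    flagged = true_positives + false_positives
    return {
        "true_positives": true_positives,
        "false_positives": false_positives,
        # Share of all flags that are actually fraud.
        "precision": true_positives / flagged,
    }


# Assumed, illustrative inputs (not taken from any real dataset).
outcome = flag_outcomes(
    legit_count=999_000,
    fraud_count=1_000,
    recall=0.90,
    false_positive_rate=0.02,
)
print(f"Fraud caught: {outcome['true_positives']:.0f}")
print(f"Legitimate transactions flagged: {outcome['false_positives']:.0f}")
print(f"Share of flags that are real fraud: {outcome['precision']:.1%}")
```

Under these assumptions, roughly 900 fraudulent transactions are caught while about 19,980 legitimate ones are flagged, so only around 4% of flags point at real fraud. Because fraud is rare, even a low false positive rate can leave a large number of legitimate customers facing frozen accounts or blocked payments.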

FinTech Partners Trust the Math

Many fintech firms place blind trust in AI outputs. In their view, statistical risk models are objective and superior to human judgment. While this reduces operational overhead and speeds decisions, it also sidelines human oversight. When AI errs, affected users may find themselves locked out without explanation.

Bulletproof or Blindfolded? FinTech’s Risky Dependency on AI

Automation Without Auditing Is a Ticking Time Bomb

Reliance on opaque AI, where decision logic is not explainable, undermines trust and accountability. Without regular auditing, bias detection, and transparency, institutions risk systemic mistakes. Over time, technical glitches or model drift might produce widespread misclassifications, harming legitimate users en masse.
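One simple form such an audit can take is a periodic drift check that compares the model's current inputs or scores against a baseline. The sketch below uses the Population Stability Index (PSI), a common drift metric; it is offered as one possible approach, not as the method any particular firm uses. The function name, the equal-width binning, the sample data, and the 0.25 alert level (a frequently quoted rule of thumb) are all assumptions for illustration.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a recent sample of the same quantity."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the baseline range
            counts[idx] += 1
        # Floor each share at eps so empty bins do not cause log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical model scores: a baseline period and a later period shifted upward.
baseline_scores = [i / 100 for i in range(100)]
recent_scores = [min(s + 0.3, 0.99) for s in baseline_scores]

psi_same = population_stability_index(baseline_scores, baseline_scores)
psi_shifted = population_stability_index(baseline_scores, recent_scores)

ALERT_LEVEL = 0.25  # assumed rule-of-thumb threshold, to be tuned per model
print(f"PSI, identical data: {psi_same:.3f}")
print(f"PSI, shifted data:   {psi_shifted:.3f}")
print("Drift alert" if psi_shifted > ALERT_LEVEL else "No drift alert")
```

A check like this does not explain individual decisions, but running it on a schedule gives auditors an early signal that the population the model sees has moved away from the one it was trained on, which is when widespread misclassification becomes more likely.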

But the Alternative? Cybercrime Wins

Without AI, fraud would scale faster than human teams can respond. According to industry research, machine learning–based fraud detection remains the most effective defense against adaptive fraud strategies (Nature). The choice is stark: accept some privacy intrusion or cede control of financial systems to criminals.

Cyberattacks in FinTech Firms with vs. without AI Implementation

| Institution Type | Implemented AI Fraud Detection | Annual Fraud Loss Rate | Customer Trust / Reputation Index |
| --- | --- | --- | --- |
| Traditional Bank without AI | No | High | Moderate–Low |
| FinTech Firm with AI | Yes | Low | High–Stable (McKinsey & Company) |

Regulation or Innovation: Which Should Lead in FinTech?

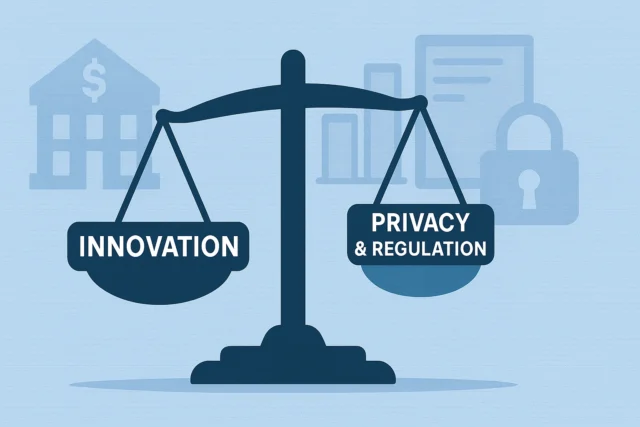

Regulation Risks Stifling FinTech Innovation

If regulators impose overly rigid privacy rules, FinTech companies may lose the ability to deploy real-time fraud detection. Compliance burdens could slow product rollouts, increase costs, and reduce competitiveness. Overregulation might hand an advantage back to traditional banks or even to illicit actors.

But Innovation Without Guardrails Undermines Trust

Unmonitored innovation can erode consumer trust and lead to major scandals. As firms collect more sensitive data, any breach or misuse could trigger backlash, regulatory crackdowns, and reputational damage. The long-term viability of FinTech depends on balancing speed and security with transparency and accountability.

Final Verdict: AI in FinTech Is a Price Worth Paying

AI-powered fraud detection in FinTech represents a decisive leap forward against cybercrime. The alternative, returning to manual reviews, would leave financial systems vulnerable to adaptive, AI-driven fraud strategies that evolve far faster than human teams can react. AI offers speed, scale, and effectiveness. Yes, privacy concerns and regulatory gaps are real. But the risk of unchecked financial crime is greater. That said, the path forward demands transparency, explainable AI, rigorous auditing, and a commitment to data governance. When implemented responsibly, AI in FinTech is the cornerstone of a safer financial future.

References

- AI and Data in Financial Services – OECD

- Building the AI Bank of the Future – McKinsey & Company

- The Role of AI in Combating Financial Crime – Nature

- h-in-q.com