The AI Illusion That’s Crippling Modern Business

We are living through one of the most dangerous illusions in corporate technology today: the belief that a chatbot subscription equals an AI solution. Too many executives think that adopting AI means buying ChatGPT and layering a conversational interface on top of a simple use case. An MIT study reporting that roughly 95% of enterprise generative AI initiatives fail to deliver measurable business impact suggests this misreading is both costly and widespread. (Tom’s Hardware) Real AI is not a widget you drop into Microsoft Teams or Salesforce. It must be architected as a living platform that intertwines data readiness, workflows, security, integration, and culture: exactly the systemic capabilities that distinguish the few successful implementations from the many failures. (McKinsey & Company) This article dismantles the illusion and shows why superficial AI leads to failure, while systemic AI drives value.

The Grand Illusion: Why AI ≠ Chatbot

Hype vs. Reality: The Chatbot Misconception

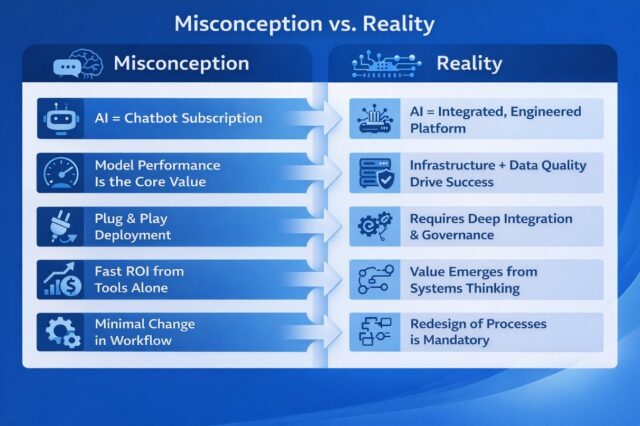

There’s a gulf between expectation and reality. Many companies treat AI like a chatbot subscription, licensing tools without grounding them in business problems or platforms. Generic AI tools may seem enticing, but superficial deployments fail to integrate with core systems, producing exactly the stalled results documented in enterprise failure research. (mlq.ai)

How Corporate Hype Warps AI Strategy

This hype isn’t organic; it’s reinforced by vendors and consultants who profit from selling quick solutions. Boards see the marketing narratives and assume implementation will be equally simple, only to find that enterprise AI requires complex engineering and organizational alignment. (McKinsey & Company)

Common Enterprise AI Misconceptions


The Hidden Reality: 95% of AI Isn’t “AI”

Software Engineering: The Real Backbone

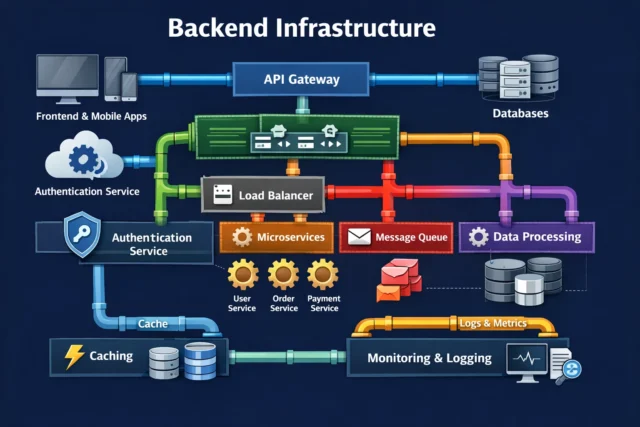

Despite popular narratives, 95% of the work in AI solutions is not about machine reasoning. It’s about software engineering, data orchestration, and lifecycle management, which industry experts classify as infrastructure and governance. (McKinsey & Company) AI models excel at reasoning, but it is the platform that turns reasoning into business impact.

From Orchestration to Monitoring

AI doesn’t exist in a bubble. To be trustworthy and resilient, AI systems need orchestration layers that handle dependencies, scaling, and failure. Reliable monitoring dashboards visualize latency, data drift, and throughput in real time, which is essential for business continuity.

Core Engineering Components

- Secure API gateways

- Scalable data pipelines

- Version control for models & data

- Observability and logging

- Deployment automation
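One lightweight way to approach "version control for models & data" is content addressing: fingerprint every artifact and record which data version produced which model version. The sketch below uses SHA-256 from Python's standard library; the `ArtifactRegistry` class and its fields are hypothetical, and a dedicated model registry or data-versioning tool would normally play this role.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict


def fingerprint(payload: bytes) -> str:
    """Return a short SHA-256 content hash for an artifact."""
    return hashlib.sha256(payload).hexdigest()[:12]


@dataclass
class ArtifactRegistry:
    """Hypothetical in-memory registry linking model versions to their training data."""
    models: Dict[str, dict] = field(default_factory=dict)

    def register(self, model_bytes: bytes, data_bytes: bytes, params: dict) -> str:
        model_id = fingerprint(model_bytes)
        self.models[model_id] = {
            "data_version": fingerprint(data_bytes),
            # Sort keys so identical params always serialize identically.
            "params": json.dumps(params, sort_keys=True),
        }
        return model_id

    def lineage(self, model_id: str) -> dict:
        return self.models[model_id]


registry = ArtifactRegistry()
data = b"ticket_id,label\n1,refund\n2,shipping\n"
model_id = registry.register(b"<serialized model weights>", data, {"lr": 0.01, "epochs": 3})
print(model_id, registry.lineage(model_id))
```

Because identifiers are derived from content, the same bytes always map to the same version, and any change to data or weights yields a new one.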

The 5% That Matters: Rethinking the “AI Machine”

LLMs Are Engines, Not Whole Systems

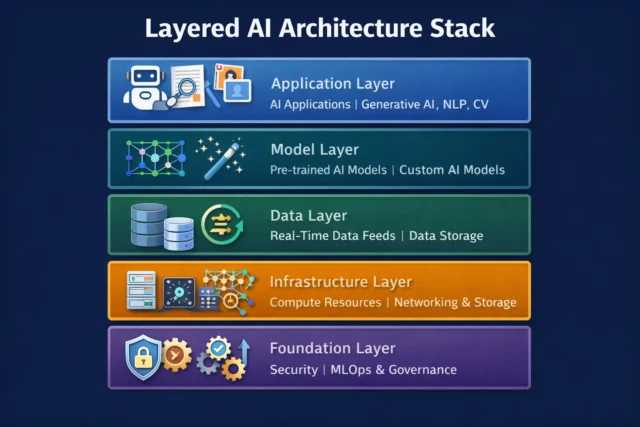

Large Language Models (LLMs) are seductive because they seem intelligent. But the model itself is only a small part (about 5%) of what makes systems succeed. Without context, workflows, and guardrails, an LLM is an engine on a test bench: impressive but unusable. (McKinsey & Company)

Business Logic Lives Outside the Model

Business logic, compliance, exception handling, and integration with existing systems are the layers that let LLMs support real decisions rather than produce isolated outputs.
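A minimal sketch of business logic living outside the model: the model only proposes an action, and ordinary code validates the output, applies business and compliance rules, and escalates anything it cannot trust. `propose_refund` is a hypothetical stand-in for an LLM call, and the JSON shape and `REFUND_LIMIT` policy are assumptions for illustration.

```python
import json
from dataclasses import dataclass


@dataclass
class Decision:
    action: str
    amount: float
    needs_review: bool
    reason: str


REFUND_LIMIT = 200.0  # Hypothetical policy: larger refunds need a human approver.


def propose_refund(ticket_text: str) -> str:
    """Hypothetical stand-in for an LLM call that returns a JSON proposal."""
    return json.dumps({"action": "refund", "amount": 350.0})


def decide(ticket_text: str, order_total: float) -> Decision:
    raw = propose_refund(ticket_text)
    try:
        proposal = json.loads(raw)
        action = str(proposal["action"])
        amount = float(proposal["amount"])
    except (json.JSONDecodeError, KeyError, TypeError, ValueError):
        # Exception handling: malformed model output never reaches downstream systems.
        return Decision("escalate", 0.0, True, "unparseable model output")

    if action != "refund":
        return Decision("escalate", 0.0, True, f"unsupported action: {action}")
    if amount <= 0 or amount > order_total:
        # Business rule: a refund can never exceed what the customer paid.
        return Decision("escalate", 0.0, True, "amount outside order total")
    if amount > REFUND_LIMIT:
        # Compliance rule: large refunds require human sign-off.
        return Decision("refund", amount, True, "above auto-approval limit")
    return Decision("refund", amount, False, "auto-approved")


print(decide("My package never arrived", order_total=400.0))
```

The same model output leads to different outcomes depending on rules the model never sees, which is the point: the decision logic is owned, tested, and audited outside the LLM.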

AI Machine vs. Full AI System

| Capability | LLM Only | Full AI System |
|---|---|---|
| Language understanding | ✔ | ✔ |
| Workflow execution | ✘ | ✔ |
| Security and permission policies | ✘ | ✔ |
| Data integration | ✘ | ✔ |
| Compliance and auditing | ✘ | ✔ |

Clean Data Is the Foundation, Not an Afterthought

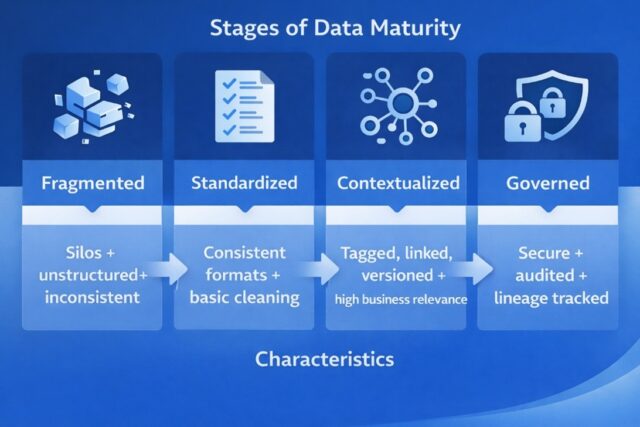

Data Quality Over Data Quantity

Many leaders fall into a familiar trap: assuming that sheer data volume guarantees insight. In reality, clean, filtered, contextualized data is what powers reliable outcomes. Chaos in inputs leads to chaos in outputs, a lesson consistent with McKinsey findings that data excellence underpins transformation. (McKinsey & Company)
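As a small illustration of "clean, filtered" inputs, the sketch below normalizes whitespace, drops empty or near-empty records, and removes case-insensitive duplicates before anything reaches a model. The plain-string record format and the `min_chars` threshold are assumptions chosen for the example.

```python
from typing import Iterable, List


def clean_records(records: Iterable[str], min_chars: int = 10) -> List[str]:
    """Normalize, filter, and deduplicate raw text records, preserving first-seen order."""
    seen = set()
    cleaned = []
    for raw in records:
        text = " ".join(raw.split())  # Collapse runs of whitespace and trim the ends.
        if len(text) < min_chars:
            continue  # Drop empty or near-empty records that add noise, not signal.
        key = text.lower()
        if key in seen:
            continue  # Drop case-insensitive duplicates.
        seen.add(key)
        cleaned.append(text)
    return cleaned


raw = [
    "  Order #1001 arrived damaged, customer wants refund ",
    "order #1001 arrived damaged,   customer wants refund",
    "",
    "ok",
    "Shipping label printed twice for order #1002",
]
print(clean_records(raw))
```

Five raw records shrink to two useful ones; at enterprise scale, the same ratio is what separates data volume from data quality.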

Contextualization Drives Value

Context gives meaning to information. A support transcript gains value only when tagged, linked to outcomes, and embedded in broader enterprise knowledge.
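The support-transcript example can be sketched as a small enrichment step: tag each transcript with topics and link it to its recorded business outcome so retrieval and analytics can use it. The keyword-based tagger, the `outcomes` lookup, and all field names are hypothetical simplifications; a real pipeline might use a trained classifier and a join against a CRM.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical topic vocabulary; a trained classifier could replace this lookup.
TOPIC_KEYWORDS: Dict[str, List[str]] = {
    "billing": ["invoice", "charge", "refund"],
    "shipping": ["delivery", "package", "tracking"],
}


@dataclass
class EnrichedTranscript:
    ticket_id: str
    text: str
    tags: List[str] = field(default_factory=list)
    outcome: Optional[str] = None


def contextualize(ticket_id: str, text: str, outcomes: Dict[str, str]) -> EnrichedTranscript:
    lowered = text.lower()
    tags = sorted(topic for topic, words in TOPIC_KEYWORDS.items()
                  if any(w in lowered for w in words))
    # Link to the business outcome recorded elsewhere, if one exists.
    return EnrichedTranscript(ticket_id, text, tags, outcomes.get(ticket_id))


outcomes = {"T-17": "refund_issued"}
print(contextualize("T-17", "Customer asked for a refund; package tracking showed no delivery.", outcomes))
```

A bare transcript becomes a record that says what it is about and what happened as a result, which is the context the paragraph above describes.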

Enterprise Data Maturity for AI

Architecting Intelligence: Platforms, Not Plugins

Workflows Redesigned for Autonomy

AI cannot simply be bolted onto existing business processes. To unlock autonomy, companies must redesign workflows so AI can act at the right decision points, a principle highlighted in modern enterprise AI maturity frameworks. (McKinsey & Company)

Modular, Scalable Architecture Wins

A reliable platform must support:

- Scalable storage

- Pluggable compute

- Version tracking

- Automated retraining triggers
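An automated retraining trigger is often a drift check on incoming data. As one common option, not necessarily what any particular platform uses, the sketch below computes the Population Stability Index (PSI) between a training-time feature distribution and live traffic over shared bins, then flags retraining above a threshold. The bin edges and the 0.2 cutoff (a widely cited rule of thumb) are assumptions to tune per feature.

```python
import math
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values in each bin defined by ascending edges; out-of-range values are clipped."""
    if not values:
        raise ValueError("values must be non-empty")
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        # Advance to the bin whose lower edge is <= v; clip into the first/last bin.
        while idx < len(counts) - 1 and v >= edges[idx + 1]:
            idx += 1
        counts[idx] += 1
    return [c / len(values) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions."""
    score = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # Avoid log(0) for empty bins.
        score += (a - e) * math.log(a / e)
    return score


def should_retrain(train_values, live_values, edges, threshold: float = 0.2) -> bool:
    return psi(bin_proportions(train_values, edges), bin_proportions(live_values, edges)) > threshold


train = list(range(100))
edges = [0, 25, 50, 75, 100]
print(should_retrain(train, train, edges))       # → False (no drift)
print(should_retrain(train, [80] * 100, edges))  # → True (live traffic shifted to the top bin)
```

In a platform, a check like this would run on a schedule and, when it fires, kick off the retraining pipeline rather than just printing.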

Platform vs. Plug‑In AI Models

| Feature | Plug‑In Tool | Platform Architecture |
|---|---|---|
| Scalability | Low | High |
| Governance | Weak | Embedded |
| Integration with Enterprise Systems | Patchy | Deep |
| Monitoring & Alerting | Optional | Standard |
| Human‑Machine Interaction Control | Basic | Advanced |

Governance and Security: The Non‑Negotiables

No Guardrails, No Trust

AI systems without proper governance are risky. IBM’s guidance on AI data governance stresses that the origin, sensitivity, and lifecycle of data must be tracked and governed before models are even deployed. (ibm.com) Security must be embedded at every layer.

Designing for Compliance and Accountability

Include mechanisms that:

- Track decisions and data lineage

- Enable human overrides

- Log outputs with context

- Restrict access based on roles
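The four mechanisms above can be sketched together: a role check before an AI action runs, a log entry that captures inputs, output, model version, and source records (lineage), and an override path that records who changed the decision and why. The roles, permission names, and `model_version` string are hypothetical; real deployments would delegate identity to an identity provider and persist the log durably.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-permission mapping (role-based access control).
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"run_model"},
    "supervisor": {"run_model", "override"},
}

decision_log: List[dict] = []


def authorize(role: str, permission: str) -> None:
    if permission not in ROLE_PERMISSIONS.get(role, set()):
        raise PermissionError(f"role '{role}' lacks '{permission}'")


def record_decision(user: str, role: str, inputs: dict, output: str,
                    model_version: str, source_ids: List[str]) -> int:
    """Check access, then log the output with its context and data lineage."""
    authorize(role, "run_model")
    decision_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "inputs": inputs,
        "output": output,
        "model_version": model_version,
        "source_ids": source_ids,  # Records the decision was based on.
        "override": None,
    })
    return len(decision_log) - 1


def override(decision_id: int, user: str, role: str, new_output: str, reason: str) -> None:
    """Let an authorized human replace an AI output while keeping the original on record."""
    authorize(role, "override")
    decision_log[decision_id]["override"] = {
        "by": user,
        "new_output": new_output,
        "reason": reason,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


did = record_decision("ana", "analyst", {"ticket": "T-17"}, "approve_refund",
                      "refund-model-v3", ["T-17", "ORD-1001"])
override(did, "sam", "supervisor", "deny_refund", "duplicate claim")
print(decision_log[did])
```

Keeping the original output alongside the override preserves accountability: auditors can see both what the model said and what a human decided.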

Governance Essentials

- Permission tiers & RBAC

- Immutable audit logs

- Model version control

- Regular compliance checks

- Ethical review boards
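Immutable audit logs are usually enforced by storage (write-once buckets, append-only tables), but the tamper-evidence idea behind them can be sketched with a hash chain: each entry stores the hash of the previous one, so editing any past entry breaks verification. This is an illustrative sketch with hypothetical event fields, not a substitute for a managed audit service.

```python
import hashlib
import json
from typing import List


def _entry_hash(prev_hash: str, event: dict) -> str:
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditChain:
    """Append-only log where each entry commits to the hash of the one before it."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[dict] = []

    def append(self, event: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        h = _entry_hash(prev, event)
        self.entries.append({"event": event, "prev": prev, "hash": h})
        return h

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry makes this return False."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != _entry_hash(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True


audit = AuditChain()
audit.append({"actor": "refund-model-v3", "action": "approve_refund", "ticket": "T-17"})
audit.append({"actor": "sam", "action": "override", "ticket": "T-17"})
print(audit.verify())                                 # → True
audit.entries[0]["event"]["action"] = "deny_refund"   # Simulated tampering.
print(audit.verify())                                 # → False
```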

Adoption and Culture: Where AI Really Lives

AI Isn’t Adopted, It’s Absorbed

You can’t deploy AI and walk away. Adoption depends on organizational culture, cross‑functional collaboration, and human‑in‑the‑loop design. AI maturity frameworks such as McKinsey’s emphasize that adoption and change management are core pillars of enterprise success. (McKinsey & Company)

Human‑In‑The‑Loop Is Not Optional

Humans must be integrated for quality checks, validation, and continuous learning loops. Without tight coupling of human and machine roles, AI becomes a curiosity.
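A common human-in-the-loop pattern is confidence-based routing: predictions above a threshold are applied automatically, the rest go to a reviewer queue, and reviewer corrections are kept as labeled examples for the next training cycle. The threshold, the `Prediction` shape, and the in-memory queue are hypothetical; the right cutoff depends on the cost of errors in each workflow, and it assumes the model's scores are reasonably calibrated.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # Model-reported score in [0, 1]; calibration is assumed, not guaranteed.


class ReviewRouter:
    def __init__(self, threshold: float = 0.85) -> None:
        self.threshold = threshold
        self.auto_applied: List[Prediction] = []
        self.review_queue: List[Prediction] = []
        self.training_feedback: List[Tuple[str, str]] = []  # (item_id, corrected label)

    def route(self, pred: Prediction) -> str:
        """Auto-apply confident predictions; queue the rest for a human."""
        if pred.confidence >= self.threshold:
            self.auto_applied.append(pred)
            return "auto"
        self.review_queue.append(pred)
        return "human"

    def submit_review(self, item_id: str, corrected_label: str) -> None:
        """Record a reviewer's decision so it can feed the next retraining cycle."""
        self.review_queue = [p for p in self.review_queue if p.item_id != item_id]
        self.training_feedback.append((item_id, corrected_label))


router = ReviewRouter(threshold=0.85)
print(router.route(Prediction("T-1", "billing", 0.97)))   # → auto
print(router.route(Prediction("T-2", "shipping", 0.55)))  # → human
router.submit_review("T-2", "billing")
print(router.training_feedback)                           # → [('T-2', 'billing')]
```

The reviewer's correction does double duty: it fixes the immediate case and becomes training signal, which is the continuous learning loop the paragraph describes.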

Adoption Best Practices

- Internal AI champions

- Tailored training paths

- Transparent AI decision reporting

- Feedback loops for improvement

Stop Buying Tools, Start Building Systems

Tool Stack Culture Destroys Value

Measuring AI readiness by subscriptions purchased is dangerous. Tools are components, not architecture. Organizations that stack licenses yet ignore systemic thinking typically see little ROI and short‑lived initiatives.

System Thinking Is a Strategic Advantage

Platforms such as IBM’s watsonx show what a holistic AI architecture can look like, combining data management, model governance, and deployment tools into one enterprise framework. (Wikipedia)

Strategic Shifts That Drive AI Success

- Invest in platforms, not point tools

- Align KPIs to business outcomes

- Appoint product owners, not just project managers

- Build for evolution, not demos

- Treat AI like infrastructure, not an experiment

AI Is a System, Not a Shortcut

AI is among the most transformative technologies of our time, but only for those who build it right. The 95% of projects that fail do so not because the technology is flawed, but because leaders mistake AI for a tool instead of a comprehensive engineered system. Real AI success requires clean contextual data, platform‑first infrastructure, tight governance, redesigned workflows, and seamless human‑machine integration.

Chatbot subscriptions and flashy demos are features, not systems. When enterprises stop chasing tools and start building platforms, AI becomes a strategic engine of value. The future belongs to those who engineer AI holistically, not superficially.

References

- Why So Many High-Profile AI Projects Fail (MIT Sloan)

- Charting a Path to the AI-Driven Enterprise (McKinsey)

- Data Governance for AI: The New Frontier (IBM)

- Enterprise AI Adoption Maturity Report (Deloitte)

- What Is IBM Watsonx? (Wikipedia)

- Automating Data Pipelines for Real AI Impact