Responsible AI in Theory, Power Politics in Practice

Responsible AI has gone from visionary ideal to corporate buzz phrase with remarkable speed. From boardrooms in Silicon Valley to regulatory proposals in Brussels, companies now trumpet responsible approaches as if ethics were a product feature. Yet this rhetoric often masks a race for competitive edge, not a commitment to societal accountability. This article cuts through the platitudes and draws a sharp dividing line between legitimate governance capable of managing real risk and what is essentially ethical marketing, a form of AI greenwashing. To understand who truly owns the narrative of Responsible AI, we must confront both the ideal and the opportunistic currents shaping it.

Who Defines Responsible AI in a Lawless Landscape?

Tech Giants vs. Regulators: A Governance Tug‑of‑War

Responsible AI rhetoric has become a first‑mover claim for dominant tech firms attempting to set standards before regulators can. Corporations publish AI principles, convene ethics boards, and release transparency reports to influence the very rules that might constrain them later. This power play shapes what Responsible AI means in practice, not just in theory. While regulators scramble to draft frameworks, companies like OpenAI and Google publish guiding documents that define their own boundaries. This “self‑standardization” risks conflating corporate preference with public interest.

From Ethics to Excuses: When Frameworks Become Shields

Frameworks can devolve into excuses. Many Responsible AI frameworks echo familiar language (fairness, transparency, accountability) yet lack enforcement. Critics have labeled this practice ethics‑washing, as discussed in Harvard Business Review: lofty statements serve more as PR than practice, much like the patterns documented in corporate social responsibility marketing. Real frameworks require measurable commitments, auditing processes, and mechanisms for redress, not just glossy principles. Without accountability, Responsible AI risks being an attractive shield against criticism rather than a tool to protect stakeholders.

Responsible AI or Risk Avoidance in Legal Advice Basics?

Liability Loopholes and Self‑Policing in AI Products

A central tension in Responsible AI lies in legal advice basics: who is liable when a system gets it wrong. Many AI products disclaim liability for errors, particularly in sensitive domains like healthcare or finance. The language in their terms of service effectively tells users not to rely on AI for decisions that have real consequences. These disclaimers shift risk onto users and away from creators. True Responsible AI governance would ensure creators retain accountability when their systems lead to harm, rather than outsourcing responsibility through dense legalese.

Legal vs. Ethical AI Approaches

| Focus Area | Legal Advice Basics | Responsible AI Ethics |
| --- | --- | --- |
| Objective | Avoid lawsuits | Ensure fairness and safety |
| Accountability | Follows current law | Proactive protection of users |
| Enforcement | Contractual disclaimers | Independent audits |
| Impact on Users | Limited recourse | Reparative channels |

Responsible AI as a Legal Branding Strategy

Calling a system “responsible” can confer legal advantages. It signals that developers have considered risk, even if no enforceable mechanisms exist. Responsible branding can insulate companies from scrutiny, because critics struggle to argue against something that, by label, is already “ethical.” This twists Responsible AI into a marketing strategy rather than a socio‑technical imperative. The question is not just what Responsible AI means, but who gets to define it: corporations or society.

Real‑world reference: McKinsey discusses risk mitigation strategies that companies adopt before regulatory frameworks catch up, often reshaping markets rather than being shaped by policy.

Medical Advice Meets Machine Learning: A Dangerous Symbiosis

Why Responsible AI Is Critical in Healthcare Algorithms

When machines offer medical advice, the stakes are human life. AI systems are increasingly embedded in diagnostic tools, treatment recommendations, and patient triage systems. Without robust Responsible AI principles, these systems can encode bias, prioritize efficiency over safety, or give confident but incorrect guidance. Healthcare governance has historically required rigorous clinical trials validated through independent oversight. Yet many AI‑assisted tools enter the market with minimal transparent evaluation.

How to Use AI Responsibly in Life‑or‑Death Decisions

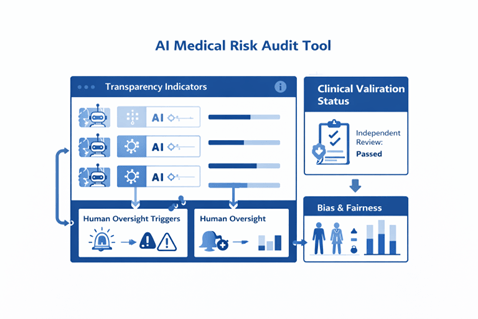

Responsible AI requires more than avoidance of harm; it demands affirmative safeguards. For medical applications, this includes human‑in‑the‑loop decision systems, transparent model explanations, bias detection, and external review panels that include clinicians and ethicists.

Laying these safeguards out in a governance dashboard forces organizations to see, before deployment, where lapses in responsibility may occur and where governance must intervene.

MIT Technology Review highlights concerns about bias and validation gaps in clinical AI systems.
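The human‑in‑the‑loop safeguard described above can be illustrated with a small routing rule. This is a minimal sketch under stated assumptions, not a clinical implementation: the `Recommendation` fields, the `route` function, and the `REVIEW_THRESHOLD` value are all hypothetical and would need to be set through clinical validation.

```python
from dataclasses import dataclass

# Hypothetical cut-off; a real value would come from clinical validation.
REVIEW_THRESHOLD = 0.90


@dataclass
class Recommendation:
    patient_id: str
    suggestion: str
    confidence: float  # model's self-reported confidence in [0, 1]
    high_risk: bool    # True when the suggestion affects treatment or triage


def route(rec: Recommendation) -> str:
    """Decide who must act on a model recommendation."""
    # High-risk suggestions always go to a clinician, whatever the confidence.
    # Low-confidence suggestions are escalated as well.
    if rec.high_risk or rec.confidence < REVIEW_THRESHOLD:
        return "clinician_review"
    # Everything else is accepted but still logged for later audit.
    return "auto_accept_with_audit_log"


if __name__ == "__main__":
    rec = Recommendation("patient-001", "escalate triage priority", 0.97, True)
    print(route(rec))
```

The design choice worth noting is that confidence alone never bypasses the clinician for high‑risk suggestions, which guards against the confident but incorrect guidance mentioned earlier.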

Framework Ethics or Just Framework Theater?

The Rise of AI Ethics Committees and Their Limits

AI ethics committees have proliferated, from corporate boards to academic panels. On paper, these groups suggest checks and balances. In reality, many lack enforcement power or independence. Some are advisory only, offering recommendations that can be ignored without consequence. This mirrors critiques of ineffective compliance boards in other industries, where committees exist primarily for optics. Without enforceable authority and transparent reporting, these bodies can resemble theater more than governance.

Why Most AI Framework Ethics Lack Enforcement Teeth

Responsible AI frameworks often lack the infrastructure to enforce their own standards. Principles are published, but few companies tie these principles to performance metrics, compensation incentives, or third‑party audits. Transparency is nominal rather than operational. Real Responsible AI practices would include publicly available audit results, mechanisms for user complaints, and legally binding obligations. Instead, many frameworks remain aspirational, serving branding rather than accountability.
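One way to turn a fairness principle into a performance metric that can be audited is to measure the gap in favorable‑outcome rates between groups and fail the audit when the gap exceeds an agreed limit. The sketch below is illustrative only: the demographic parity gap is one of several possible fairness measures, and the function names and the `MAX_GAP` threshold are assumptions, not part of any framework cited here.

```python
from collections import defaultdict

# Hypothetical limit; an organization would have to set and publish its own.
MAX_GAP = 0.10


def selection_rates(records):
    """Compute the favorable-outcome rate per group.

    records: iterable of (group, favorable) pairs, where favorable is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, favorable in records:
        totals[group] += 1
        positives[group] += int(favorable)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Largest difference in favorable-outcome rate between any two groups."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


def passes_audit(records, max_gap=MAX_GAP):
    """True when the measured gap stays within the agreed limit."""
    return parity_gap(records) <= max_gap


if __name__ == "__main__":
    sample = [("a", True), ("a", False), ("b", False), ("b", False)]
    print(selection_rates(sample), passes_audit(sample))
```

A check like this only becomes accountability once the threshold, the data, and the results are open to a third party, which is the point the paragraph above makes.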

AI Governance Framework vs. Implementation Reality

| Component | On Paper | In Practice |

| Transparency | Public principles | Limited data disclosure |

| Accountability | Ethics committees | No binding authority |

| Third‑Party Review | Suggested | Rare |

| User Redress | Stated in text | Often nonexistent |

The World Economic Forum highlights the challenges in translating ethical frameworks into enforceable norms.

Public Trust or Publicity Stunts? The PR of Responsible AI

Why Consumers Are Misled by AI Responsibility Claims

Companies have a commercial interest in portraying their AI as safe and ethical. This marketing surge exploits public concern about AI risks while providing minimal real protection. Deloitte’s research on trust in technology suggests that many consumers conflate corporate claims of responsibility with actual safety practices, even when evidence is scarce or opaque.

Genuine Governance vs. Cosmetic Compliance

True governance relies on checks, balances, and consequences, not catchphrases. To separate sincere Responsible AI implementations from greenwashing, we must look for concrete indicators: independent audits, open reporting, stakeholder participation, and mechanisms for accountability when harm occurs.

Checking claims against these indicators forces clarity in accountability rather than ambiguity in branding.

How to Use AI Responsibly: Beyond the Buzzwords

Real Standards Every Company Should Follow

Responsible AI must be actionable, not aspirational. Companies should adopt standards that embed responsibility into development lifecycles. This includes operational checkpoints, external validation, and user protections. These standards are not optional; they are fundamental to ethical innovation. Accomplishing this requires shifting organizational incentives, investing in ethical expertise, and building governance structures that match the power of the technology itself.

What Responsible AI Should Look Like in Practice

Below are non‑negotiables for any AI labeled as Responsible AI:

- Open Datasets When Possible: Enables scrutiny and reproducibility

- Transparent Models: Explanations for automated decisions

- Third‑Party Review: Independent auditing by experts

- Human Oversight: Ensure humans remain in critical loops

- Legal Accountability: Legal obligations to compensate harm

If an organization cannot honestly implement these elements, it cannot reasonably claim to pursue Responsible AI. These are practical standards that go beyond buzzwords into governance reality.
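The five non‑negotiables above can be expressed as a simple self‑assessment gate. This is a hedged sketch rather than an established standard: the `ResponsibleAIChecklist` class and both helper functions are hypothetical names introduced for illustration, and each flag would need supporting evidence (for example, a published audit) rather than a self‑declared value.

```python
from dataclasses import dataclass, fields


@dataclass
class ResponsibleAIChecklist:
    open_datasets: bool         # datasets are open wherever that is possible
    transparent_models: bool    # automated decisions come with explanations
    third_party_review: bool    # independent experts audit the system
    human_oversight: bool       # humans remain in critical decision loops
    legal_accountability: bool  # legal obligation to compensate harm


def missing_elements(checklist: ResponsibleAIChecklist) -> list[str]:
    """Names of the elements the organization has not implemented."""
    return [f.name for f in fields(checklist) if not getattr(checklist, f.name)]


def may_claim_responsible_ai(checklist: ResponsibleAIChecklist) -> bool:
    """The label is only justified when every element is in place."""
    return not missing_elements(checklist)


if __name__ == "__main__":
    assessment = ResponsibleAIChecklist(
        open_datasets=True,
        transparent_models=True,
        third_party_review=False,
        human_oversight=True,
        legal_accountability=False,
    )
    print(missing_elements(assessment))
    print(may_claim_responsible_ai(assessment))
```

The gate is deliberately all‑or‑nothing, mirroring the article's position that a partial implementation does not earn the label.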

AI Will Govern: The Question Is Who Governs It

Responsible AI is more than a slogan. It is the mechanism by which powerful systems interact with society, medicine, law, and individual lives. Too often, the term has been captured by marketing, overselling safety while underserving accountability. The future of AI depends not on how compelling Responsible AI sounds but on how enforceable it is. Governance must be transparent, enforceable, and participatory, not cosmetic.

The verdict is clear: if Responsible AI remains defined by the same entities seeking to profit from it, society will get greenwashed governance, not real protections. Accountability must shift toward independent mechanisms, embedded legal responsibilities, and measurable standards. Anything less is not Responsible AI in action; it is Responsible AI in name only.

References

Ethics-Washing in AI Is a Real Danger – Harvard Business Review

What Every CEO Should Know About Generative AI – McKinsey

The AI Medical Revolution – MIT Technology Review

How to Build Trust in AI – Deloitte

From Principles to Practice: AI Governance Challenges – World Economic Forum

Responsible AI Needs Human Oversight Unless It’s Just a Label – H-in-Q