The Illusion of Control in Responsible AI

Responsible AI has become the centerpiece of every tech giant’s values page, and corporate manifestos now boast lofty ethical principles tied to artificial intelligence. But the lionization of Responsible AI often masks a disturbing reality: without rigorous human oversight, it is little more than a polished label designed to placate regulators and the public. This article argues that true Responsible AI must involve deep, empowered human control at every stage of deployment, not symbolic frameworks or checkbox ethics that satisfy PR departments. The tension between using AI for rapid automation and preserving accountability must be resolved in favor of grounded, enforceable oversight if Responsible AI is to mean more than marketing collateral. Let’s dismantle the myth and rebuild a framework that respects both technological capability and human responsibility.

Responsible AI Requires More Than a Buzzword

The Rise of Responsible AI in Corporate Narratives

Corporations have accelerated their Responsible AI announcements, filling white papers with glossy principles but offering few details on execution. McKinsey’s Responsible AI principles outline how to build transparent, accountable AI systems, yet many organizations only publish the principles without operationalizing them (mckinsey.com). The rhetoric surrounding Responsible AI often outpaces its operational reality, creating a veneer of ethical leadership without substantial accountability. This practice converts Responsible AI into a rhetorical shield rather than a technical mandate, and invites skepticism regarding corporate commitment to ethics over optics.

Ethics Frameworks Are Meaningless Without Execution

Many organizations adopt ethics frameworks that lack enforcement mechanisms. According to Harvard’s guidance on building AI frameworks, ethical principles like fairness, transparency, and accountability are essential, but they must be tightly coupled with actionable oversight processes (professional.dce.harvard.edu). Without structured processes that tie ethics into engineering workflows, frameworks remain aspirational. Responsible AI demands not just philosophical statements but ingrained procedures, measurable outcomes, and consequences for ethical failures. Without this, ethics frameworks serve public relations more than public responsibility.

How to Use AI Responsibly: Efficiency vs Accountability

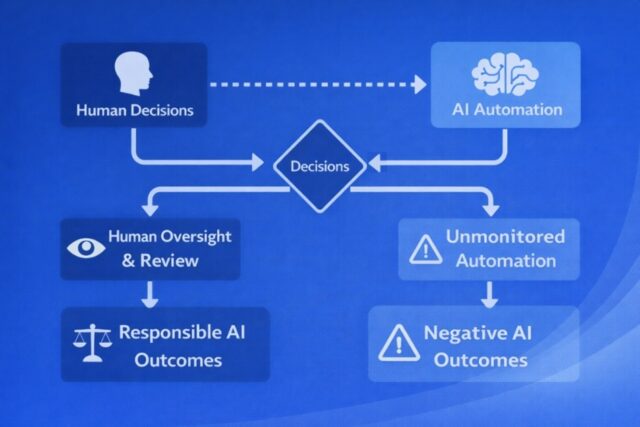

AI’s Efficiency Obscures Human Disempowerment

AI’s efficiency is intoxicating. It automates decisions at scales humans cannot match. Yet this efficiency often comes at the cost of oversight. Research highlights that while managers increasingly acknowledge Responsible AI’s importance, real-world systems still automate critical decisions with minimal human review (hbr.org). When using AI becomes synonymous with reducing human involvement, accountability evaporates. Responsible AI cannot be defined by speed or cost savings alone. Oversight is not a drag on performance; it is the backbone of trustworthy systems.
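To make the point about human review concrete, here is a minimal sketch of a routing rule that keeps a person in the loop for critical decisions. Everything in it is an assumption for illustration: the `Decision` fields, the `route` function, and the `CONFIDENCE_FLOOR` value are hypothetical and do not come from any of the cited frameworks.

```python
from dataclasses import dataclass

# Hypothetical threshold; in practice an oversight team would set and revisit it.
CONFIDENCE_FLOOR = 0.90


@dataclass
class Decision:
    subject_id: str
    outcome: str
    confidence: float  # model's self-reported confidence in [0, 1]
    high_stakes: bool  # e.g. credit, hiring, or medical triage decisions


def route(decision: Decision) -> str:
    """Return 'human_review' or 'auto_approve' for a model decision."""
    # High-stakes decisions always go to a human, regardless of confidence.
    if decision.high_stakes:
        return "human_review"
    # Low-confidence decisions are escalated as well.
    if decision.confidence < CONFIDENCE_FLOOR:
        return "human_review"
    return "auto_approve"
```

The design choice worth noting is that the high-stakes check comes first, so no confidence score, however high, can bypass review for the decisions that matter most.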

Human Oversight Slows Innovation. Or Does It?

The prevailing counterargument is that human oversight slows innovation. This view contends that tying up decisions in review cycles restricts agility. Yet IBM’s definition of Responsible AI emphasizes governance frameworks that include accountable human roles and traceability, which suggests that oversight can yield better, more sustainable outcomes for organizations (IBM).

Responsible AI Implementation Outcomes

| Metric | With Human Oversight | Without Human Oversight |
| --- | --- | --- |
| Ethical Incidents | Low | High |
| User Trust | High | Low |
| Regulatory Penalties | Minimal | Frequent |
| Deployment Speed | Moderate | Rapid |

Framework Ethics vs Real Accountability in Responsible AI

The Problem with Ethical Frameworks-as-PR

Too often, Responsible AI frameworks resemble checklist exercises aimed at avoiding criticism. They create the illusion of moral commitment while sidestepping real accountability. This practice dilutes public trust and deflects scrutiny. When the question of how to use AI is reduced to ethical branding, companies evade substantive ethical responsibility. Genuine frameworks must bind ethics to implementation, not dilute them into slogans.

Radical Transparency as the Only Valid Framework

Radical transparency means systems are auditable, explainable, and open to scrutiny. Harvard Business Review has emphasized that transparency into AI’s decision-making processes is essential for accountability and trust (hbr.org). Systems must expose decision logic, data sources, and human intervention points.
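As one illustration of what exposing decision logic, data sources, and human intervention points could look like in practice, the sketch below defines an audit record that is written for every automated decision. The `AuditRecord` fields and the `to_audit_json` helper are hypothetical names chosen for this example, not part of any cited standard.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass
class AuditRecord:
    decision_id: str
    model_version: str
    decision_logic: str                   # plain-language reason for the outcome
    data_sources: List[str]               # datasets or feeds the decision relied on
    human_reviewer: Optional[str] = None  # None means no human intervened
    human_override: bool = False          # True if the reviewer changed the outcome


def to_audit_json(record: AuditRecord) -> str:
    """Serialize a record with stable key order so logs are easy to diff and audit."""
    return json.dumps(asdict(record), sort_keys=True)
```

A record with `human_reviewer` left as `None` makes the absence of oversight visible in the log itself, which is the kind of scrutiny the paragraph above calls for.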

Ethical Framework Comparison Across Companies

| Company | Principles Published | Operational Metrics | Third-Party Audit / Transparency Reports | Tangible Accountability Mechanisms |
| --- | --- | --- | --- | --- |
| Microsoft | Yes: Responsible AI principles published publicly (fairness, reliability, privacy, transparency, accountability, inclusiveness). (Microsoft) | Yes: Microsoft publishes its internal Responsible AI Standard and monitoring tools to assess compliance and risks across AI products. (MS Blogs) | Yes: Microsoft releases Responsible AI Transparency Reports with oversight of high-risk models and governance processes. (Microsoft) | Yes: Office of Responsible AI and internal governance structures tied to corporate accountability practices and product reviews. (Microsoft) |
| IBM | Yes: IBM outlines ethical principles and governance frameworks via its Responsible Technology Board and public AI ethics discussions. (IBM) | Partial: IBM’s Responsible Technology Board and governance practices build operational standards, though specific public metrics are less detailed. (IBM) | Yes/Partial: industry recognition and governance frameworks. IBM’s ethical governance is recognized externally (e.g., Forrester Wave on AI governance), but is not always tied to standardized third-party audit publications. (IBM) | Yes: IBM’s governance boards and review processes serve as accountability mechanisms in practice. (IBM) |
| Google (Alphabet) | Yes: Google publishes AI Principles guiding development and use, including safety, privacy, user benefits, and human oversight. (Google AI) | Partial: Google uses internal evaluation frameworks for responsible development, though public metric dashboards are not standardized or directly comparable. (Google AI) | Partial: Google publishes some AI responsibility reports and benchmark research, but formal third-party audits are not broadly public. (Google AI) | Partial: Principles are tied to product policies and internal review boards, but tangible accountability outcomes are more internal than externally enforced. (Google AI) |

Responsible AI Needs Diverse Human Oversight

Homogeneous Oversight Teams Reinforce Bias

Human oversight is not inherently ethical. When teams lack diversity of thought, background, and expertise, oversight becomes a mirror of existing biases. Research on AI governance shows that oversight practices must be designed to mitigate bias and ensure equity across populations (ScienceDirect). Homogeneous teams reviewing AI systems risk reinforcing the very blind spots they intend to correct.

Human Diversity as a Technical Safeguard

In contrast, diverse oversight teams detect errors and ethical risks that homogeneous groups miss. A multidisciplinary team combining technologists, ethicists, domain experts, and affected community representatives provides nuanced perspectives that strengthen Responsible AI outcomes. Human diversity becomes not just an ethical safeguard but a technical one, reducing systemic blind spots.

Qualities of an Effective Human Oversight Team

- Cross-domain expertise

- Representation of affected demographics

- Technical literacy in AI systems

- Empowerment to halt or modify deployments

- Clear accountability roles

Responsible AI Without Power Is Just Branding

Who Has the Authority to Say AI Is Responsible?

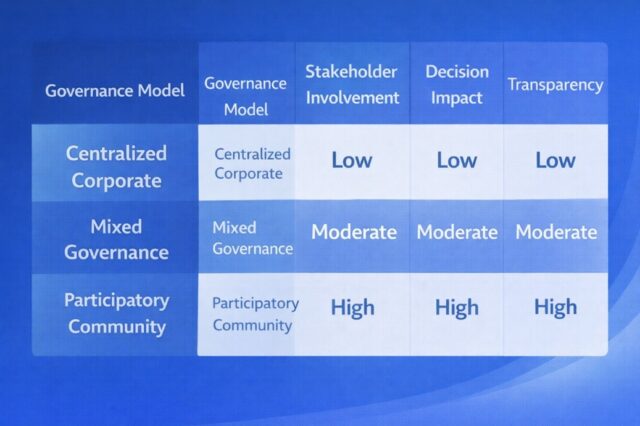

The question of authority is critical. Tech executives proclaim Responsible AI, but regulators, civil society, and end-users play crucial roles in defining what responsible means. Concentrating authority within corporate boundaries reinforces imbalanced power structures. Responsible AI requires distributed authority where multiple stakeholders influence deployment decisions.

Real Responsibility Means Redistributing Control

True Responsible AI governance involves participatory models where communities impacted by AI systems have a voice in how systems operate. This shift redistributes decision-making power, ensuring Responsible AI is grounded in lived experience and social context rather than boardroom branding.

AI Governance Participation Levels

Responsible AI Needs Teeth, Not Talk

Responsible AI cannot survive as a hollow promise. It must be constructed on empowered human oversight, radical transparency, and redistributed authority. Marketing-friendly ethical frameworks without measurable enforcement are insufficient. How to use AI responsibly is not a question of slogans but of structural change. True Responsible AI requires rigorous human involvement, diverse perspectives, and accountable governance mechanisms that bind ethical intentions to real-world outcomes. Only then will Responsible AI break free from branding and become a substantive force for technological innovation aligned with human values. Responsible AI with teeth fosters trust, mitigates harm, and ensures that AI’s power serves society rather than mere corporate narratives (mckinsey.com).

📚 References

- Responsible AI Principles – McKinsey

- Building a Responsible AI Framework – Harvard DCE

- How Responsible AI Protects the Bottom Line – Harvard Business Review

- IBM’s Take on Responsible AI

- AI Governance and Equity – ScienceDirect

- responsible-ai-vs-rapid-innovation-ethics-by-design – H-in-Q