The Collision Course of Conscience and Code

The explosive pace of AI breakthroughs clashes with mounting demands for moral accountability. As companies and innovators race to deploy next‑generation tools, Responsible AI becomes more than a buzzword: it becomes a strategic imperative. The tension between speed and ethics frames the core challenge: can we accelerate AI innovation without sacrificing human dignity, safety, or trust? Responsible AI cannot be optional window dressing; it needs to be baked into design, not bolted on. This article argues that with the right mindset and “ethics by design,” innovation and integrity can coexist and even reinforce each other.

Why Responsible AI Is More Than Just a PR Strategy

The Commercialization of Ethics in Corporate AI

Many corporations adopt Responsible AI largely as brand insurance, a way to signal responsibility without deep structural change. In such cases, ethics becomes marketing, cloaked in lofty mission statements but lacking real follow‑through. Superficial adoption of this kind turns ethics frameworks into PR theater rather than operational discipline.

Framework Ethics: Governance or Performance Theater?

True ethics frameworks demand governance, not just promises. That means embedding oversight, accountability, transparency, and explainability into development pipelines. Without this backbone, ethics pledges amount to little more than moral posturing. According to institutional definitions, Responsible AI involves ethical and legal safeguards against bias, unfairness, and misuse. (iso.org)

| Corporate Promise Type | Typical Features | Risks if Unenforced |
| --- | --- | --- |
| Ethics as branding | Mission statements, public pledges | Ethics washing, loss of trust |
| Ethics by design & governance | Internal policies, oversight, audits | Slower rollout, higher upfront cost |

This comparison shows that not all commitments labeled “Responsible AI” are equal. Without structure and follow‑through, such pledges collapse under scrutiny.

The Innovation Dilemma: Is Responsible AI Slowing Us Down?

Agile Sprints vs Ethical Roadblocks

Modern AI development often aims for rapid iteration: minimum viable product, fast deployment, learning from feedback. But ethical constraints such as safety reviews, bias audits, and transparency requirements introduce friction. Critics argue that Responsible AI slows progress and discourages bold experimentation.

Healthcare AI: Delayed or Protected by Ethics?

In sectors like healthcare, where AI may offer diagnostics, treatment suggestions, or medical advice, oversight is even more stringent. Tight ethics controls can lengthen approval cycles, potentially delaying benefits to patients. But that delay may be preferable to releasing biased, unsafe AI systems that could cause harm.

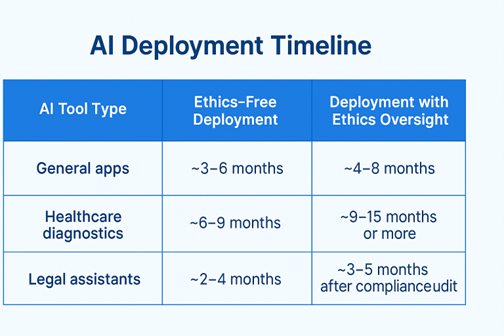

Time to Market: Ethics‑Aware vs Ethics‑Free AI Tools

The dashboard highlights the trade-off: ethics review slows time-to-market. Yet in critical domains such as health, basic legal advice, and social impact, slower deployment may be the responsible approach.

Still, critics argue that ethical oversight can kill momentum, especially for early‑stage projects with limited resources. Others contend that such friction is the price of safety.

Responsible AI in Medicine: Guardian or Gatekeeper?

Can AI Be Trusted to Give Medical Advice Responsibly?

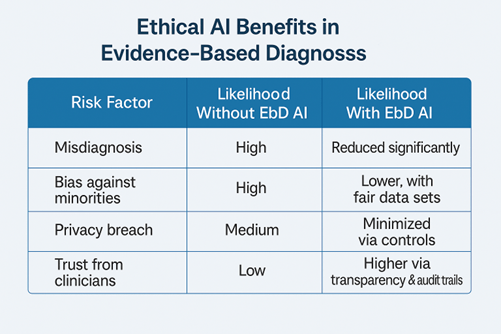

AI-driven systems promise to transform healthcare by offering diagnostic support, personalized treatment suggestions, and medical advice tailored to individual conditions. But without guardrails, these systems risk misdiagnosis, bias (especially against underrepresented populations), and violations of patient privacy. Responsible AI aims to embed safety, privacy, and fairness into design so that AI supports clinicians rather than undermining them. (European Commission)

Overregulation and the Death of Innovation

Opponents warn that heavy-handed ethics controls, such as lengthy compliance checks, data governance requirements, and extensive audits, slow or even block beneficial medical AI innovations. In competitive industries, delays may lead to loss of funding or abandonment of promising projects. In some cases, life‑saving AI tools could remain in lab drawers indefinitely under the weight of compliance hurdles.

Risk vs Reward in Medical AI under Responsible AI

The tool shows that embedding Responsible AI principles such as fairness, privacy, and accountability strongly mitigates risk, even though it may slow rollout.

AI and the Law: When Responsible AI Meets Legal Advice Basics

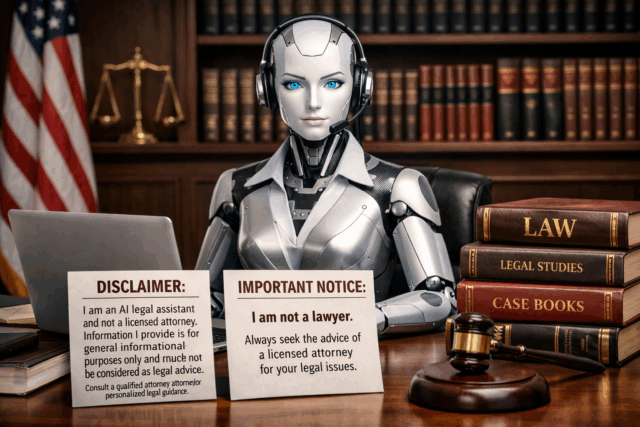

AI’s Legal Advice Basics and the Ethics Backlash

Increasingly, AI tools claim to provide legal counsel: contract review, rights advisories, and basic legal advice for lay users. Without rigorous controls, these tools risk producing misleading or incorrect guidance, potentially causing legal harm. Responsible AI in the legal domain means enforcing clarity, disclaimers, transparency, and accountability, rather than letting unregulated systems act as pseudo‑lawyers. (ibm.com)

Accountability Vacuum: Who’s Liable When AI Advises Wrongly?

If an AI system errs, whether by misguiding a user, omitting legal nuance, or misinterpreting statutes, who bears responsibility? The developer, the deployer, or the user? Without ethical and legal guardrails, liability becomes a grey area. Responsible AI frameworks establish accountability through oversight, auditability, and traceability. That helps ensure that when mistakes happen, there is clarity about who must answer for them. (unesco.org)

Liability triggers in AI‑generated legal content:

- Omission of jurisdiction-specific regulations
- Lack of transparency about training data or limitations
- Absence of disclaimers or human oversight
- Failure to provide appeal or review mechanisms

These triggers highlight why Responsible AI is critical when AI offers basic legal advice: it’s not enough to build a model; the model must live within a governance and accountability structure.
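As a rough illustration, the liability triggers listed above could be encoded as a pre-release checklist that runs against each AI-generated answer. The sketch below is hypothetical: `LegalAnswer`, its fields, and `liability_triggers` are illustrative names invented for this example, not part of any real framework or legal standard.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class LegalAnswer:
    """Hypothetical metadata attached to one AI-generated legal answer."""
    jurisdiction: Optional[str]     # e.g. "US-CA"; None if not addressed
    limitations_disclosed: bool     # training data / model limits are stated
    has_disclaimer: bool            # a "not legal advice" notice is shown
    human_reviewed: bool            # a qualified person checked the output
    review_channel: Optional[str]   # where a user can appeal or ask for review


def liability_triggers(answer: LegalAnswer) -> List[str]:
    """Return the triggers from the list above that this answer would hit."""
    triggers = []
    if not answer.jurisdiction:
        triggers.append("omits jurisdiction-specific regulations")
    if not answer.limitations_disclosed:
        triggers.append("no transparency about training data or limitations")
    if not (answer.has_disclaimer and answer.human_reviewed):
        triggers.append("missing disclaimer or human oversight")
    if not answer.review_channel:
        triggers.append("no appeal or review mechanism")
    return triggers
```

An empty result does not make an answer legally sound; it only means none of the four listed triggers fired. A deployment might block publication, or route the answer to a human reviewer, whenever the returned list is non-empty.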

Ethics by Design: Idealistic Theory or Practical Strategy?

Embedding vs Retrofitting: The Real Cost of Ethical Engineering

Ethics by design means integrating ethical considerations such as privacy, fairness, transparency, and accountability from the very start of AI system development. Waiting until after deployment to patch in ethics (retrofitting) often proves inefficient, costly, and unreliable. The concept of “Ethics by Design” (or EbD‑AI) argues for making ethical guardrails a core part of the engineering workflow. (SpringerLink)
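One way to picture a guardrail that lives inside the engineering workflow is a fairness check that runs before every release, alongside ordinary tests. The sketch below is a minimal example under stated assumptions: it uses demographic parity (the gap in positive-prediction rates between groups) as the metric, and the `max_gap=0.1` threshold and all function names are hypothetical choices for illustration, not something prescribed by EbD‑AI or any cited source.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def fairness_gate(predictions, groups, max_gap=0.1):
    """Return True if the release may proceed under the assumed threshold."""
    return demographic_parity_gap(predictions, groups) <= max_gap


# Toy data: binary predictions for two groups, "a" and "b".
preds = [1, 1, 0, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
release_ok = fairness_gate(preds, groups)  # gap is 0.5 - 0.25 = 0.25
```

With the toy data the gate fails, which in a build pipeline would stop the release until the gap is investigated. Demographic parity is only one of several fairness metrics, and the right metric and threshold depend on the domain.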

Who Owns the Ethics Layer? Developers or Regulators?

Embedding ethics in AI raises a fundamental question: who bears responsibility for ethical compliance, the developers building the system or external regulators and governance bodies? EbD‑AI suggests putting much of the onus on developers, equipping them with actionable tasks to embed human-rights-aligned values. This bridges the gap between ethical theory and technical practice. (SpringerLink)

| Approach | Benefits | Limitations / Risks |
| --- | --- | --- |
| Ethics-by-design (EbD) | Early alignment with values, lower long-term risk, built-in accountability | Requires developer buy-in, can slow iteration |
| Retroactive auditing | Flexibility, faster initial deployment | High risk of bias/errors, expensive fixes |

Design-time ethics integration proves more sustainable. Retroactive audits may catch problems, but often too late.

When Framework Ethics Collide with Market Pressures

Startups, Burn Rate, and Responsible AI Tradeoffs

For startups racing to launch, build MVPs, and attract funding, ethics compliance can feel like a luxury. Lean teams may skip rigorous design-phase ethics to move faster. For many early-stage ventures, the overhead of Responsible AI (audits, governance, transparent documentation) may seem unsustainable. As a result, Responsible AI risks becoming something only large, well-resourced enterprises can afford.

Investor Incentives: Ethics or Exit Strategy?

Venture capital and investor expectations frequently prioritize fast growth and disruption. Pressure to deliver market value quickly incentivizes minimum viable products, not robust, ethics-compliant systems. Until investors value “ethics as strategy,” many promising AI projects will favor speed over responsibility, putting users and society at risk.

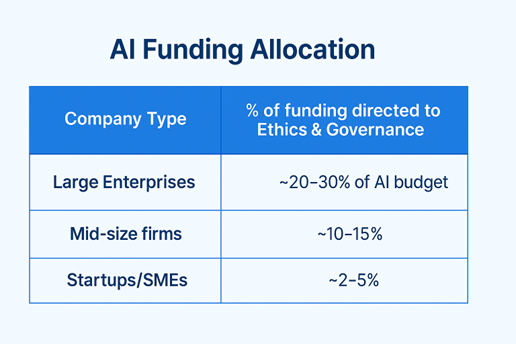

Dashboard – Funding Allocation to Responsible AI by Company Size

This allocation reflects reality: high ethics investment tends to appear where resources are ample; for early-stage ventures, ethics is often marginal.

Responsible AI or Irresponsible Delay?

Embedding ethics into AI (through Responsible AI and Ethics by Design) is a structural accelerator for sustainable innovation. Speed without integrity leads to fracture: bias, regulatory backlash, loss of trust. Yet a well-implemented ethics framework preserves agility while safeguarding human values. Innovation and ethics are not opposites; they are co‑founders of the future. Companies that treat Responsible AI as an integral strategy will not just survive; they will lead. The future of AI belongs to those who build it fast, but build it right.

References

- Ethical Principles for Artificial Intelligence | UNESCO

- The Problem with AI Ethics Frameworks | Harvard Business Review

- Ethics by Design and Ethics of Use Approaches | European Commission

- What to Know About AI and the Law | McKinsey

- AI Ethics: Balancing Innovation and Regulation | MIT Technology Review

- Ethics and AI: Where Responsibility Begins | IBM

- Embedding Ethics into AI Systems | SpringerLink

- Self-Regulation or State Regulation? Who Sets the Boundaries for AI?